Big tech companies are choosing the peninsula to locate their key infrastructures, whose resource consumption is skyrocketing thanks to AI. This is the map of the main projects already in operation and those yet to come.

2024 has been the year that confirmed Spain as a destination for hosting the guts of the internet. Microsoft announced in February that it will expand its three data processing centres in Madrid and build another three in Aragon.

Amazon wants to considerably increase the capacity of the three it already had in that region. Added to these projects are the large Meta campus in Talavera de la Reina, whose construction was given the green light this week by the Junta de Castilla-La Mancha, and many other developments by smaller companies.

The rise of digital services and the rush for artificial intelligence (AI) have meant that the volume of data being processed and stored every day continues to increase. To make this possible, more and more data centres are needed, the facilities where all this happens.

Spain has established itself as a good place to locate them: the price of energy is comparatively cheap, there is a lot of capacity to generate renewable energy, the fibre optic infrastructure is good and there is a direct connection with America through submarine cables, which will soon channel 70% of transatlantic internet traffic.

Reactions to this frenzy of data centre construction in Spain are mixed. On the one hand, hyperscalers (as the largest ones are known), usually located in sparsely populated areas, entail large investments in the territories where they are installed, generate employment (although the most qualified positions tend to be for foreigners) and attract more technology companies with related activities.

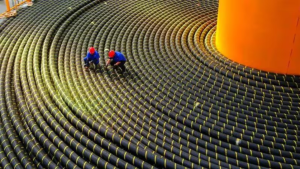

That’s the friendly side. The other is that this type of infrastructure consumes large amounts of energy and water. Data centres are large industrial warehouses full of racks, towers of processors that look like futuristic refrigerators.

The systems work night and day, so they need a lot of electricity. Especially if they are dedicated to training artificial intelligence (AI) models: it is estimated that GPUs, the processors used for these tasks, consume up to ten times more than CPUs, the ones used for conventional tasks.

And generative AI, the technology behind ChatGPT or Gemini, has already arrived at Spanish computing centres, as EL PAÍS reported yesterday.

All those computers working together 24 hours a day generate a lot of heat. One of the most commonly used systems to cool them is spraying water into the rooms to cool the environment. This water must also be drinkable, since if it contains impurities, it can damage the servers.

There are more modern methods that do not involve the use of water, although they increase energy consumption. Data4’s in Alcobendas, for example, is based on mechanical compression, similar to that of an air conditioner or refrigerator. “Our water consumption is 0,” says a company spokesperson.

In Spain there are around a hundred data centres, according to the business association Spain DC. It is striking that not even the employers’ association itself knows the exact figure: this hundred is the result of an estimate made based on the information provided by its associated companies, which often do not say whether or not they have data centres or how many, and what Big Tech declares.

EL PAÍS has compiled a map of the data centres already in operation that are known and the main projects announced, excluding installations with less than 10 MW of power.

Size is precisely the first element to take into account. In the sector, a distinction is made between data centres that are operated by companies that offer their computing and data storage capacity to third parties, and those that belong to a single company, such as Microsoft, Amazon or Meta.

The latter are usually larger than the rest. Those that have an installed capacity equal to or greater than 50 MW are known as hyperscalers.

The million-dollar question: how much do they consume?

It is not easy to find out how much energy and water these facilities consume. Companies are not obliged to communicate this data and, in some cases, they do not even acknowledge where their facilities are located, citing security reasons.

EL PAÍS has contacted the main technology companies present in Spain to find out how many resources they consume and their response is usually the same: they refer to the official newspapers of the autonomous communities in which they operate, where the project specifications are published.

Extracting information from there poses an additional problem: the documentation provided to the authorities specifies the installed power of the centres (that is, the maximum power they can draw), but does not indicate what the actual or usual consumption is.

This makes it almost impossible to get an accurate picture of the consumption of data centres in Spain. For example, in the case of the three Microsoft data centres in the Community of Madrid, which are already in operation but not 100% complete, we only know the maximum power they may need, not the operating power.

The aggregate data handled by Spain DC is 178 MW of actual power throughout the country at the start of 2024, although they themselves recognise that this may be too low. However, their figure is 19.5% higher than that of 2023, which confirms the upward trend.
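As a rough sanity check on the growth figure above, the 2023 value can be backed out from the two numbers quoted. This is only a sketch: the function and variable names are illustrative, and the result is an implied figure, not one published by Spain DC.

```python
def implied_previous(current: float, growth_pct: float) -> float:
    """Back out the earlier value from a current value and a percentage increase."""
    return current / (1 + growth_pct / 100)


# Figures quoted in the article (Spain DC, start of 2024).
power_2024_mw = 178.0
growth_pct = 19.5

# Implied 2023 figure; an inference, not a published number.
power_2023_mw = implied_previous(power_2024_mw, growth_pct)

print(f"Implied 2023 figure: {power_2023_mw:.1f} MW")
print(f"Absolute increase: {power_2024_mw - power_2023_mw:.1f} MW")
```

Under these assumptions, the 2023 figure would have been roughly 149 MW, that is, an increase of about 29 MW in one year.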

On the other hand, there are large technology companies that process data in Spain, but not in their own facilities. Google, for example, opened the Madrid Cloud region two years ago (it has 32 in the world), which is what it calls the infrastructure with which it offers low-latency cloud computing services.

Data management is done with Google hardware and software, but in third-party facilities. In other words, Google does not have data centres in Spain, although it offers its own services for this type of infrastructure.

There is no data, however, on the amount of water they consume. To obtain an estimate of this magnitude, EL PAÍS has reviewed the specifications for the projects of the large data centres that have been built and planned.

The problem is the same: the available figures refer to the maximum permitted consumption, not the actual consumption.

Distribution in Spain

The largest of those being prepared by Big Tech will be the one to be built by Meta in Talavera de la Reina. It will have an installed capacity of 248 MW and will consume up to 504 million litres of water per year.

The initial figure was higher, but the company decided to correct it downwards after the Tajo Hydrographic Confederation questioned in a report whether there was capacity to meet these needs. According to sources familiar with the project, this correction has to do with the commitment to a cooling system based on dry air.

After Meta, the most resource-intensive data centres will be those built by Amazon (AWS, the company’s cloud computing subsidiary) in Aragon. According to the technical information contained in the specifications, the facilities in Huesca, Villanueva de Gállego and El Burgo de Ebro will have exactly the same characteristics: an installed capacity of 84 MW and a water consumption of 36.5 million litres per year.

According to company sources, the three availability zones are approximately 30 kilometres from each other to ensure good latency and so that, if one fails, the other two can take over while the incident is resolved.

Next in size is one of the centres that Microsoft will launch in Madrid, specifically in Meco (58.8 MW of installed power), which will be much larger than the other two that the company plans in that community: Algete (32 MW) and San Sebastián de los Reyes (7.8 MW).

Curiously, again according to the data provided by the company to the authorities, the one in Algete will have a greater potential water consumption (34.6 million litres per year) than the one in Meco (24.4 million litres), despite the latter being larger.

There is still no public data on the three centres that Microsoft wants to build in Aragon, beyond the fact that they will entail an initial outlay of 6.69 billion euros.

When fully operational, the Big Tech facilities will consume up to 567 MW (that will be their installed power), the same as around 160,000 average Spanish homes, and around 645 million litres of water per year, the equivalent of what 14,000 people consume in one year.

This does not include the three data centres that Microsoft will build in Aragon, about which there is still no information.
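To make the household and per-person equivalences above easier to read, the short sketch below divides the quoted totals by the quoted number of homes and people. The variable names are illustrative, and the per-unit values are implied by the article's figures rather than stated in it.

```python
# Totals and equivalences quoted in the article.
TOTAL_POWER_MW = 567            # combined installed power of the Big Tech facilities
HOMES = 160_000                 # "around 160,000 average Spanish homes"
TOTAL_WATER_LITRES = 645_000_000
PEOPLE = 14_000

# Implied power per home, in kW (a power figure, not annual energy).
kw_per_home = TOTAL_POWER_MW * 1000 / HOMES

# Implied water use per person, per year and per day.
litres_per_person_year = TOTAL_WATER_LITRES / PEOPLE
litres_per_person_day = litres_per_person_year / 365

print(f"Implied power per home: {kw_per_home:.2f} kW")
print(f"Implied water per person: {litres_per_person_year:,.0f} litres/year")
print(f"Implied water per person: {litres_per_person_day:.0f} litres/day")
```

The comparison works out to roughly 3.5 kW per home and about 126 litres of water per person per day. Since megawatts measure power, the household comparison is between maximum power figures, not between yearly electricity bills.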

There are other data centres located in Spain that, in terms of size, are comparable to or surpass those that the big technology companies will launch in the coming years. One of them is already in operation in Alcalá de Henares, Madrid, and until 2020 belonged to Telefónica.

The estimated water consumption for when phase five of its deployment is operational, theoretically from 2020 (by which time it no longer belonged to Telefónica), is a maximum of 85 million litres per year, according to what EL PAÍS has been able to find out after making a request for public information to the Community of Madrid.

That would make it the thirstiest data centre in Spain today, an honour that it will lose when the Talavera centre is launched. In terms of installed capacity, the largest of the assets (200 MW) belongs to Solaria Energía and is in Puertollano, Ciudad Real (Castilla-La Mancha), according to data from the employers’ association Spain DC.

A growing demand

The Community of Madrid is currently the hub of data centres in Spain, with an installed capacity of around 164 MW, according to data from the real estate investment firm Colliers. Is that a lot or a little? Not too much, if compared to the main European regions: London (979 MW), Frankfurt (722 MW), Amsterdam (496 MW) and Paris (416 MW) far exceeded it at the end of 2023, according to a report by the real estate consultancy JLL. Colliers estimates that, once all the planned facilities are operational, Madrid will reach 792 MW.
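The scale of that projection can be put in context with a small calculation. The sketch below uses only the figures quoted above; the names are illustrative. It compares Madrid's projected capacity with the other hubs' end-2023 capacity, so it ignores any growth in those cities, and it mixes two sources (Colliers and JLL) that may not measure capacity in the same way.

```python
# Installed capacity at the end of 2023, in MW (JLL figures quoted in the article).
hubs_mw_2023 = {"London": 979, "Frankfurt": 722, "Amsterdam": 496, "Paris": 416}

# Madrid today and once all planned facilities are operational (Colliers figures).
madrid_now_mw = 164
madrid_planned_mw = 792

# How many times larger the projected capacity is than the current one.
growth_factor = madrid_planned_mw / madrid_now_mw

# Hubs whose end-2023 capacity would still exceed Madrid's projected capacity.
still_larger = [city for city, mw in hubs_mw_2023.items() if mw > madrid_planned_mw]

print(f"Projected growth: x{growth_factor:.1f}")
print(f"Still larger than projected Madrid: {still_larger}")
```

On these numbers, Madrid's capacity would multiply almost fivefold, and only London's end-2023 figure would remain above it.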

In any case, some experts consulted by this newspaper believe that AWS and Microsoft will turn Aragon into the region with the most data centres in Spain. It is already being called the “Virginia of Europe”, in reference to the US state that has the most facilities of this type.

Those planned by AWS alone exceed 250 MW of installed power (the size of the centres that Microsoft will build there is still unknown). Colliers analysts calculate that, if all the committed projects are executed, including those of AWS and Microsoft, Aragon could reach 1,800 MW in the next few years, which would make it the most important region in Spain.

Other sources, however, believe that Madrid will not lose the lead. Not only because of the nearly 100 MW of installed power that Microsoft has already said it will add, but because, by the time the Aragonese AWS and Microsoft centres are operational, it is likely that several of the Madrid centres will expand their capacities and new ones will open.

This is just the beginning

The emergence of generative AI has turned the industry upside down. Traditional data centres are not suitable for training and supporting the gigantic models behind ChatGPT, Llama, Copilot, Gemini or Claude.

Higher-density processors are needed, which means more energy and water consumption. “Air cooling systems are not efficient with high-density racks, which are used to train AI models, but instead require much more energy. That is why today’s large data centres are moving from air to water,” says Lorena Jaume-Palasí, a technology expert and advisor to the European Parliament on AI.

None of the AI developers have revealed the water cost of this technology. We only have estimates. The ones that serve as a reference are those of Shaolei Ren, associate professor of electrical and computer engineering at the University of California, Riverside.

He estimates that training the first ChatGPT cost about 700,000 litres of water and, as he recently published, the development of the GPT-3 model consumed four times more than estimated. Making between one and 50 queries to the chatbot costs about two litres of water, and not half a litre, as he initially estimated.

These figures will fall short with the GPT-4 version, already in use, and with GPT-5, theoretically in the development phase. “Moving to cooling methods that do not use water is easy to say, but difficult to do,” says Ren.